
cerence October 2022

CERENCE CONTROL CENTER

How natural and empathic speech dialog with digital avatars becomes possible

As part of the EMMI project, Cerence created a control center that unifies all software components contributed by Cerence: the Cerence Control Center (CCC). The CCC provides the following five central functions:

- Transcription of spoken language, Automatic Speech Recognition (ASR)

- Analysis and interpretation of the recognized words, Natural Language Understanding (NLU)

- Recognition and interpretation of paralinguistic information in speech and the emotional state of users (EMO)

- Creation and control of a dialog with the user (Dialog Manager)

- Synthetic generation of spoken language, Text-To-Speech (TTS)

The diagram shows how the individual components interact and illustrates the steps necessary to provide an empathic digital conversation partner.

To connect the components flexibly and to make information accessible to and exchangeable between the project partners, a communication interface had to be defined and implemented. Via this interface, the partners Charamel, DFKI, and CanControls can retrieve relevant intermediate results of the speech dialog system and process them independently. Because every component in the EMMI project uses the same websocket-based interface, the components and partners can communicate with one another smoothly.
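On the receiving side, a partner typically listens on one websocket connection and routes each incoming text frame to the code that cares about it. The sketch below shows such a router. The message envelope (`component` and `payload` fields) and the class name `MessageRouter` are hypothetical assumptions for illustration; the actual EMMI message schema is not described here, and the websocket connection itself is left out so the example stays self-contained.

```python
import json
from typing import Callable, Dict

# Hypothetical envelope (an assumption, not the documented EMMI schema):
#   {"component": "asr" | "nlu" | "emo" | "tts", "payload": {...}}
Handler = Callable[[dict], None]


class MessageRouter:
    """Routes JSON text frames from a websocket connection to per-component handlers."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def on(self, component: str, handler: Handler) -> None:
        """Register the callback for one component's intermediate results."""
        self._handlers[component] = handler

    def dispatch(self, raw: str) -> bool:
        """Parse one frame and pass its payload on; return True if it was handled."""
        try:
            message = json.loads(raw)
        except json.JSONDecodeError:
            return False  # skip malformed frames instead of stopping the listener
        if not isinstance(message, dict):
            return False
        component = message.get("component")
        if not isinstance(component, str):
            return False
        handler = self._handlers.get(component)
        if handler is None:
            return False  # this partner has no interest in that component's results
        handler(message.get("payload", {}))
        return True


# Usage: a partner that only consumes emotion results.
router = MessageRouter()
router.on("emo", lambda payload: print("emotion:", payload.get("label")))
router.dispatch('{"component": "emo", "payload": {"label": "calm"}}')
```

In a real client, every text frame received from the websocket connection would be passed to `dispatch`, so each partner subscribes only to the intermediate results it needs.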

SPOKEN WORD TRANSCRIPTION, AUTOMATIC SPEECH RECOGNITION (ASR)

The first essential function is recognizing the user's speech, known as speech recognition or ASR (Automatic Speech Recognition). The speech recognition component in the CCC picks up the audio signal from the microphone, extracts the spoken language, and converts it into text using language-specific models in the background.

Speech recognition was integrated into the CCC so that the microphone is always active. The user initiates a dialog with a wake-up word (WuW): the system listens continuously for any phrase defined as a WuW and interprets the words that follow it as input. Continuous listening is also important for the emotion recognition component, because it makes it possible to infer an emotion from the incoming audio signal even when no voice command was spoken. More on that below.
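The wake-up word logic can be sketched as a filter over the continuously recognized text: everything is transcribed, but only the words after a WuW are treated as a command. The phrases in `WAKE_UP_WORDS` and the function `extract_command` are hypothetical; the CCC's actual WuW configuration and detection method are not described in the text, and real systems usually spot the WuW acoustically rather than in the transcript.

```python
from typing import Iterable, Optional

# Hypothetical wake-up phrases; the CCC's actual WuW configuration is not described.
WAKE_UP_WORDS = ("hello emmi", "hey car")


def extract_command(transcript: str, wake_words: Iterable[str] = WAKE_UP_WORDS) -> Optional[str]:
    """Return the words spoken after the first wake-up phrase.

    Returns None if no wake-up phrase occurs, and an empty string if the
    phrase was heard but nothing has followed it yet.
    """
    # Compare whole tokens so that e.g. "they car" does not match "hey car".
    tokens = [t.strip(".,!?") for t in transcript.lower().split()]
    phrases = [w.lower().split() for w in wake_words]
    for start in range(len(tokens)):
        for phrase in phrases:
            end = start + len(phrase)
            if phrase and tokens[start:end] == phrase:
                return " ".join(tokens[end:])
    return None


print(extract_command("Hey car, please drive me to Cologne."))
```

A `None` result only means that no command was given; the same audio can still be forwarded to emotion analysis, which is why the microphone stays open.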

ANALYSIS AND INTERPRETATION OF SPOKEN WORDS, NATURAL LANGUAGE UNDERSTANDING (NLU)

In the second step, the speech recognition result is passed to an NLU module, which analyzes the recognized words and derives a semantic representation from the text. For example, filler words can be filtered out, and paraphrases of a command can be normalized to a single user intention: the instructions "Please drive me to Cologne" and "I would like to have a navigation to Cologne" can both be mapped to the same "intent". This simplifies further processing, because downstream components no longer need to handle different phrasings and can work with the user's underlying request alone.
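To make the idea of intent normalization concrete, the sketch below maps both example sentences to one `navigate` intent with a `destination` slot. It deliberately swaps in simple regular expressions for the trained models a production NLU would use; the filler-word list, the patterns, and the names `Intent` and `understand` are assumptions for illustration only.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Intent:
    name: str
    slots: Dict[str, str] = field(default_factory=dict)


# Toy paraphrase rules (assumptions for illustration, not Cerence's NLU models).
FILLER_WORDS = re.compile(r"\b(?:please|um|uh|well)\b,?\s*", re.IGNORECASE)
PARAPHRASES = [
    ("navigate", re.compile(r"\b(?:drive|take) me to (?P<destination>\w+)", re.IGNORECASE)),
    ("navigate", re.compile(r"\bnavigation to (?P<destination>\w+)", re.IGNORECASE)),
]


def understand(utterance: str) -> Optional[Intent]:
    """Map a recognized utterance to a normalized intent, or None if nothing matches."""
    cleaned = FILLER_WORDS.sub("", utterance).strip()  # drop filler words first
    for name, pattern in PARAPHRASES:
        match = pattern.search(cleaned)
        if match:
            return Intent(name, dict(match.groupdict()))
    return None


a = understand("Please drive me to Cologne")
b = understand("I would like to have a navigation to Cologne")
print(a, b, a == b)
```

Both phrasings yield an equal `Intent` object, so later stages only need to handle `navigate` with a destination. Note that `\w+` captures only single-word destinations, one of many limitations a rule-based sketch has.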

RECOGNITION AND INTERPRETATION OF PARALINGUISTIC INFORMATION AND EMOTIONAL STATE OF USERS

The paralinguistic and emotional state analysis component of the Cerence Control Center recognizes emotions and other paralinguistic characteristics (so-called traits) in the speaker's voice. In the background, various voice characteristics are compared against specially trained probabilistic models in order to continuously decide on the speaker's current state. The resulting values are not only displayed within the CCC but can also be retrieved via the EMMI websocket interface, so that they can be passed on to other systems as required.

Care was taken to keep the architecture flexible. For example, the user can freely set the number of detections per second. This is especially useful for research on emotion time windows, which can provide valuable insight into how long an audio window must be to detect an emotion robustly and reliably.
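The interplay between the detection rate and the audio window length can be illustrated with a small scheduling sketch: each detection looks back over the most recent window of audio, and the most probable class is taken as the current state. The parameter `window_s`, the functions `analysis_windows` and `decide`, and the emotion labels are hypothetical; the text only states that the number of detections per second is configurable, not how windows are formed or how the models decide.

```python
from typing import Dict, List, Tuple


def analysis_windows(duration_s: float, detections_per_s: float,
                     window_s: float) -> List[Tuple[float, float]]:
    """Return the (start, end) times of the audio analyzed by each detection.

    A detection runs every 1 / detections_per_s seconds and looks back over the
    most recent window_s seconds of audio (clipped at the start of the recording).
    """
    if detections_per_s <= 0 or window_s <= 0:
        raise ValueError("rate and window length must be positive")
    hop = 1.0 / detections_per_s
    windows = []
    n = 1
    while n * hop <= duration_s + 1e-9:  # small tolerance for float rounding
        end = n * hop
        windows.append((max(0.0, end - window_s), end))
        n += 1
    return windows


def decide(probabilities: Dict[str, float]) -> str:
    """Pick the most probable state from one detection's class probabilities."""
    return max(probabilities, key=probabilities.get)


# Two detections per second over 2 s of audio, each using a 1 s window.
print(analysis_windows(2.0, 2.0, 1.0))
print(decide({"neutral": 0.2, "happy": 0.7, "angry": 0.1}))
```

With a window longer than the hop, consecutive windows overlap, which is exactly the trade-off such research explores: longer windows give the model more evidence, while a higher detection rate lets the estimate react faster.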

CREATION AND CONTROL OF A DIALOG WITH THE USER (DIALOG MANAGER)

A dialog manager is required to interact empathetically with the user and to provide the desired information. It receives the "intents" recognized by the NLU component and decides, based on them, how to continue the dialog with the user. The Dialog Manager integrated in the CCC makes these dialog options easy to enter and extend. It supports not only direct responses but also more complex sub-dialogs, and it can control any connected system via a network interface. For example, it could open the vehicle's window to let in fresh air, or adjust the lighting mood to have a calming effect on the driver.

The Dialog Manager thus forms the basis for intelligent conversation and is also the interface to all other connected systems, which both supply data to the Dialog Manager and perform the actions requested by the user.
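The core loop of such a dialog manager can be sketched as a mapping from intents to responses and actions, with a pending state for sub-dialogs that ask the user for missing information. The intent names (`set_window`, `calm_lighting`, `provide_value`), the action strings, and the class itself are hypothetical; they only mirror the window and lighting examples above and are not the CCC's actual implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class DialogManager:
    """Toy intent-to-action dialog manager (hypothetical, not the CCC implementation)."""
    actions: List[str] = field(default_factory=list)  # commands sent to connected systems
    pending: Optional[str] = None  # intent waiting for a missing slot value

    def handle(self, intent: str, slots: Dict[str, str]) -> str:
        if self.pending is not None and intent == "provide_value":
            # Sub-dialog: the user answered the follow-up question.
            intent, self.pending = self.pending, None
        if intent == "set_window":
            position = slots.get("position")
            if position is None:
                self.pending = "set_window"  # open a sub-dialog to ask for the slot
                return "How far should I open the window?"
            self.actions.append(f"window:{position}")
            return f"Opening the window {position}."
        if intent == "calm_lighting":
            self.actions.append("lighting:calm")
            return "I have dimmed the lights for you."
        return "Sorry, I can't help with that yet."


dm = DialogManager()
print(dm.handle("set_window", {}))
print(dm.handle("provide_value", {"position": "halfway"}))
print(dm.actions)
```

In a connected setup, each entry appended to `actions` would instead be sent over the network interface to the system that carries it out.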

THE SYNTHETIC GENERATION OF SPOKEN LANGUAGE, TEXT-TO-SPEECH (TTS)

In the Text-To-Speech component, the Cerence TTS engine converts words or sentences entered in the text field into computer-generated speech. The volume, speed, pitch, and timbre of the generated speech can be adjusted to the user's preferences. This matters because the sound of the voice strongly influences how empathic the digital dialog partner is perceived to be. Speech can be generated in German and English. These functions can also be called via the EMMI websocket interface.
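A client calling the TTS function remotely has to bundle the text with the voice parameters listed above. The sketch below validates and serializes such a request. The field names, value ranges, language codes, and JSON envelope are all assumptions chosen for illustration; the actual parameter names and ranges of the Cerence TTS engine and the EMMI interface are not given in the text.

```python
import json
from dataclasses import asdict, dataclass

SUPPORTED_LANGUAGES = {"de-DE", "en-US"}  # German and English; the codes are assumptions


@dataclass
class TTSRequest:
    """Hypothetical TTS request; field names and ranges are illustrative assumptions."""
    text: str
    language: str = "en-US"
    volume: float = 1.0   # linear gain, 0..2
    rate: float = 1.0     # speaking-speed factor, 0.5..2
    pitch: float = 0.0    # offset in semitones, -12..12
    timbre: str = "default"

    def validate(self) -> None:
        if not self.text.strip():
            raise ValueError("text must not be empty")
        if self.language not in SUPPORTED_LANGUAGES:
            raise ValueError(f"unsupported language: {self.language}")
        for name, value, lo, hi in (("volume", self.volume, 0.0, 2.0),
                                    ("rate", self.rate, 0.5, 2.0),
                                    ("pitch", self.pitch, -12.0, 12.0)):
            if not lo <= value <= hi:
                raise ValueError(f"{name}={value} outside [{lo}, {hi}]")

    def to_json(self) -> str:
        """Validate, then serialize into a hypothetical websocket message."""
        self.validate()
        return json.dumps({"component": "tts", "payload": asdict(self)})


print(TTSRequest("Guten Tag!", language="de-DE", rate=0.9, pitch=-2.0).to_json())
```

Validating on the client side means an out-of-range parameter fails immediately with a clear message rather than producing unexpected speech.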

Together with the consortium partner Charamel, it was decided to offer the generation of computer-generated audio files as a service that can easily be queried and returns the results. The service produces not only the audio signal itself but also lip-sync information, so that a digital avatar can move its lips in sync with the speech.
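One common way to drive an avatar's mouth from such lip-sync information is to convert timed phonemes into a sequence of visemes (mouth shapes) that the renderer interpolates between. The sketch below assumes the service returns `(phoneme, start, end)` timings in seconds; that format, the simplified `PHONEME_TO_VISEME` table, and the function name are hypothetical, since the text does not describe the actual lip-sync data exchanged with Charamel.

```python
from typing import List, Tuple

# Hypothetical, heavily simplified phoneme-to-viseme table.
PHONEME_TO_VISEME = {
    "p": "closed", "b": "closed", "m": "closed",
    "f": "lip_teeth", "v": "lip_teeth",
    "a": "open", "e": "wide", "i": "wide",
    "o": "round", "u": "round",
}


def to_viseme_track(phonemes: List[Tuple[str, float, float]]) -> List[Tuple[float, str]]:
    """Turn (phoneme, start_s, end_s) timings into (time_s, viseme) keyframes.

    Consecutive phonemes that map to the same viseme are merged into one
    keyframe, and unknown phonemes fall back to a neutral mouth shape.
    """
    track: List[Tuple[float, str]] = []
    for phoneme, start, _end in phonemes:
        viseme = PHONEME_TO_VISEME.get(phoneme, "neutral")
        if not track or track[-1][1] != viseme:
            track.append((start, viseme))
    return track


# "mama": alternating closed and open mouth shapes.
print(to_viseme_track([("m", 0.0, 0.08), ("a", 0.08, 0.2), ("m", 0.2, 0.28), ("a", 0.28, 0.4)]))
```

Because the keyframes share the time base of the generated audio, the avatar can start both at the same moment and stay in sync.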

CERENCE CONTROL CENTER - A VOICE INTERACTION SYSTEM FOR EMPATHIC HUMAN-MACHINE INTERACTION

Voice interaction systems have been around for some time and have proven to be a successful human-computer interface. They are a great way to interact with complex technical systems in a natural and human way, but there are still some challenges that need to be addressed.

One of these challenges is emotionality, both in the system's speech output and dialog and in recognizing the user's emotions; this has a great impact on how much users trust a system. This is where the CCC comes in. It is a flexible toolkit that uses deep neural networks to recognize spoken language, detect emotions, and respond accordingly.

The CCC is a speech interaction system that enables us and our project partners to realize empathic human-machine interaction. It is a construction kit that provides all relevant intermediate results, which the partners can use to create and improve their own speech interaction systems. The goal throughout is to empathize with the user, thereby building trust and making the interaction natural and intuitive.

ika, CanControls September 2021

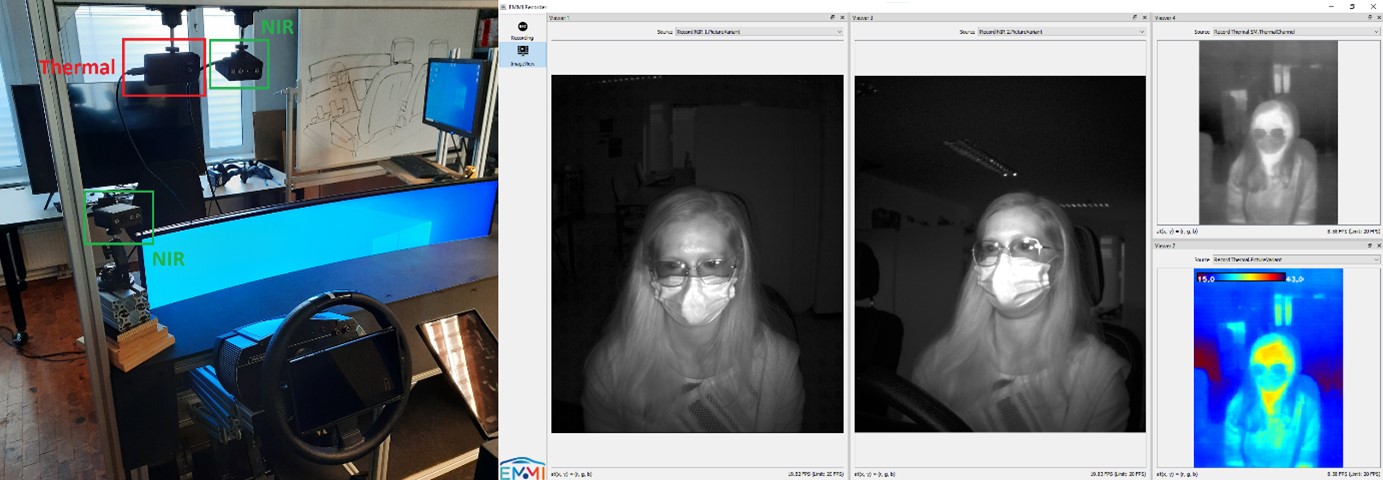

In September 2021, our EMMI interior vehicle mock-up was built and presented at the Interior Vehicle conference. In this configuration, the modular setup has three integrated displays; thanks to its modularity, other configurations can be deployed and tested at any time. The EMMI partners' hardware has also already been installed in the mock-up. For emotion recognition, studies with test subjects are now being conducted in the setup and data is being collected.

ika December 2021

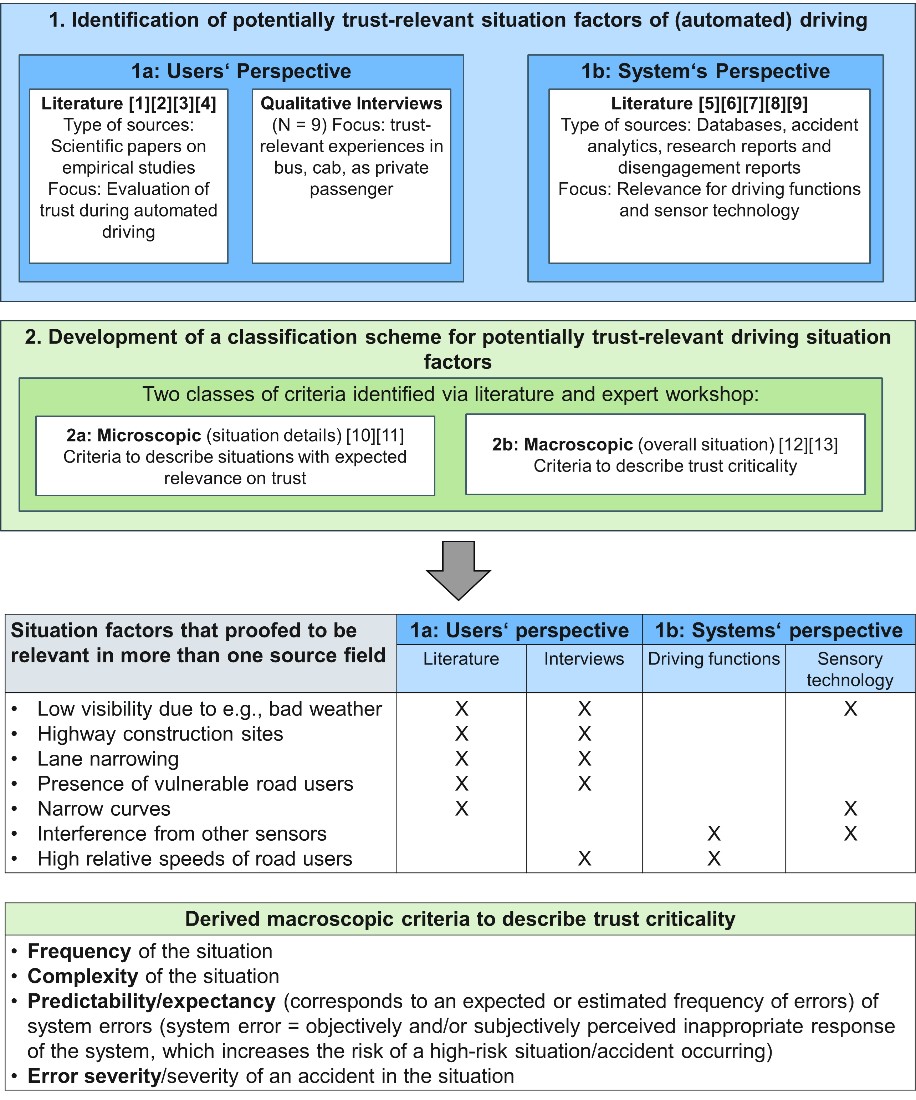

Paper published! The paper examines external situational factors, which have been studied less frequently. To better understand, describe, and classify potentially trust-relevant situational factors according to how critical they are for trust, a literature review, interviews, and an expert workshop were conducted. The result is a structured list of potentially trust-relevant driving situation factors, together with criteria for describing these situations and factors at the macroscopic and microscopic level. The factors and criteria derived theoretically in this work will be verified and, if necessary, extended in a planned on-road study.


ika January 2022

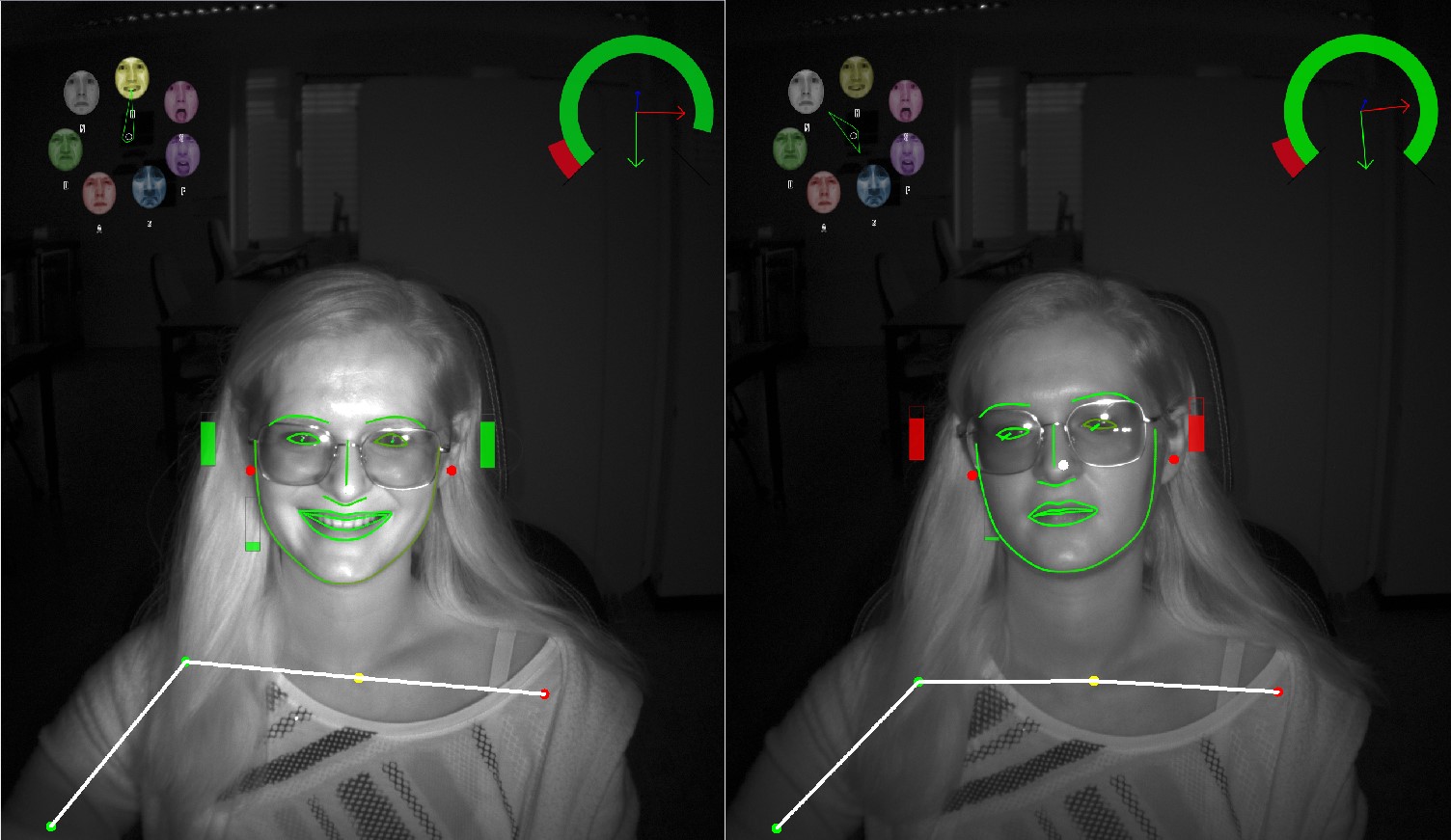

For many future users, the idea of being exposed to automation without being able to influence the vehicle's driving behavior is frightening. In EMMI, we want to change that. One approach of the EMMI project to increasing trust in automated vehicles is to make automated driving controllable. To this end, we have developed a gesture concept for communicating adjustments to the automated journey. For example, the distance to the vehicle in front, the acceleration behavior, or the vehicle's position within its lane can be changed. The gesture concept relies on simple sensor technology in the form of RGB cameras. In addition, the user's gaze direction is analyzed in order to assign a detected gesture to a specific user request.
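The role of gaze in this concept can be illustrated with a small fusion sketch: the same hand gesture is resolved into different driving requests depending on where the user is looking, and nothing is issued when the gaze estimate is too uncertain. The gesture names, gaze targets, confidence threshold, and `REQUESTS` table are hypothetical assumptions; the text does not specify the actual gestures or how the EMMI concept combines the two signals.

```python
from typing import Optional, Tuple

# Hypothetical mapping (gaze target, gesture) -> (driving parameter, direction).
REQUESTS = {
    ("vehicle_ahead", "push_away"): ("following_distance", +1),
    ("vehicle_ahead", "pull_closer"): ("following_distance", -1),
    ("road_ahead", "push_away"): ("acceleration", -1),
    ("road_ahead", "pull_closer"): ("acceleration", +1),
    ("lane_left", "swipe_left"): ("lane_offset", -1),
    ("lane_right", "swipe_right"): ("lane_offset", +1),
}


def interpret(gesture: str, gaze_target: str, gaze_confidence: float,
              min_confidence: float = 0.6) -> Optional[Tuple[str, int]]:
    """Resolve a gesture into a driving adjustment using where the user is looking.

    If the gaze estimate is too uncertain, no request is issued rather than
    guessing which adjustment the user meant.
    """
    if gaze_confidence < min_confidence:
        return None
    return REQUESTS.get((gaze_target, gesture))


print(interpret("push_away", "vehicle_ahead", 0.9))
print(interpret("push_away", "road_ahead", 0.9))
```

Returning no request for an uncertain or unknown combination is a conservative design choice: for a trust-building system, ignoring an ambiguous gesture is preferable to executing the wrong adjustment.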

ika, DFKI, cerence, CanControls, Charamel, Saint-Gobain Sekurit April 2022

Paper published! To further disseminate the project's idea and solution approaches and to make them available to interested experts, the consortium has jointly written a scientific overview paper on the overall project. The paper describes the individual solution modules, which are intended to help potential users of future automated vehicles better understand the system's behavior and thus trust their vehicle. It also describes the psychological foundations of hybrid trust modeling. The paper is freely available, and you can also find it on the download page of this website. Feel free to read it!

EMMI-Roadmap:

ika, CanControls July 2022

The foil wrap of our EMMI test vehicle is finished, and the vehicle was unveiled at IEEE Intelligent Vehicles in July 2022. At the end of the year, we will use it to conduct studies with test subjects that investigate trust in automated driving in specific situations. Hardware and software from our partners were also installed in the research vehicle. We will use CanControls' software tool to record gaze behavior, heart rate, and audio in the vehicle. We are excited to have the vehicle on the road now. Keep your eyes peeled for it!

ika July 2022

DOI http://doi.org/10.54941/ahfe1002462

We propose a theoretically derived framework on the relationship between trust in automation (TiA) and technology acceptance considering influencing factors of TiA for the application context of automated driving. The impact of trust on the acceptance of automated systems seems to be empirically proven. Nevertheless, acceptance models often do not consider the concept of trust or neglect the influencing factors of trust. To provide a more holistic perspective on these issues, we conducted a structured literature analysis. Scientific papers which consider the relationship between TiA and acceptance as well as factors influencing trust in the context of automated driving were included. Based on the identified literature, a theoretical framework was derived. The framework is intended to serve as a complement to existing sound acceptance and trust models as well as a starting point for empirical verification of the theoretical assumptions in the course of further research.

ika October 2022

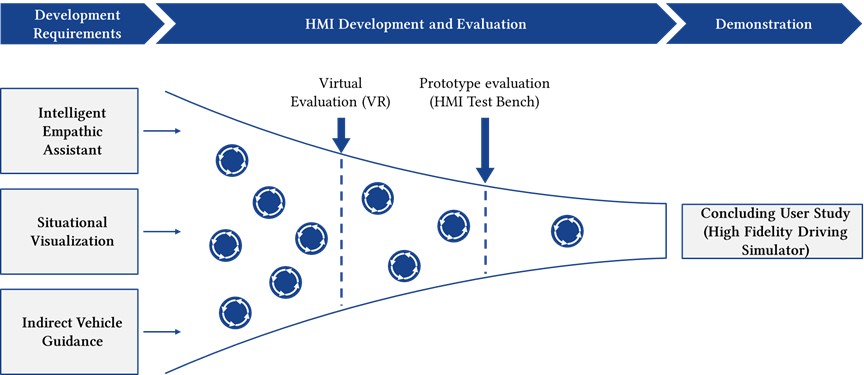

VR Environment

As part of the EMMI project, a virtual environment was created at the ika using the Unity development environment, in which HMI concepts can be implemented and evaluated at an early stage. It depicts an automated journey that includes several trust-critical situations, such as people on the roadway or intersections. The drive can be experienced either in virtual reality with VR goggles or in the interior vehicle mock-up built for the project. An expert study will test whether the situations are perceived as more trust-critical in VR or in the interior vehicle mock-up. Participants will also be asked which visualizations could increase trust in automation. Subsequently, the concepts for influencing trust in automation will be implemented in the virtual environment and tested for their effectiveness.